Random Forest Tuning

Decision Trees can be improved by tuning their hyperparameters.

Limiting feature amount in the splits

1- n_estimators

This parameter can be used to define the tree amount in the Random Forest and is one of the most significant parameters in Random Forest Algorithm.

If performance is a strict criteria for your project you can always lower n_estimators to build a random forest with less decision trees. The good news is in most cases you can get very accurate results with less trees than default. n_estimators is assigned to 100 by default in Scikit_learn which is a rather high value which will be an overkill in most machine learning solutions with Random Forest algorithm.

Usually numbers around 10 give very good results while saving lots of computational resources. Optimum value will still depend on the project and dataset and you can easily experiment with a few values and use metrics evaluation to conclude the perfect fit for your project.

Here is an example Random Forest tuning in Python:

RF = RandomForestClassifier(n_estimators = 12)

RandomForest Specific

- n_estimators

- n_jobs

- oob_score

- bootstrap

- max_samples

- warm_start

- verbose

Shared with Decision Trees

- criterion

- splitter

- max_features

- max_depth

- min_samples_split

- min_samples_leaf

- class_weight

Limiting feature amount in the splits

2- n_jobs

n_jobs is a great parameter that will give you hardware level control.

It defines the amount of processors that can be used when random forest machine learning algorithm is running. It will parallelize training and prediction processes among trees based on the processor number defined here.

By default n_jobs is assigned to None which means only 1 processor will be utilized and there won’t be any parallelization.

You can specify the number as an integer to defined the processor amount to be utilized or you can also assign n_jobs to -1 which means all processors will be used. This can significantly boost performance when your random forest is more sluggish than you’d like.

Here is a Python code example:

RF = RandomForestClassifier(n_jobs=-1)

Limiting feature amount in the splits

3- oob_score

oob_score stands for out of bag score and it’s a useful cross validation technique that can be implemented in Random Forest algorithm. This parameter is False by default. If oob_score is True, out of bag samples will also be used for accuracy estimation in the model.

Random Forest algorithm works by training Decision Trees independently using bootstrap samples. Bootstrap samples are randomly chosen to train individual Decision Trees in the model. So during each Decision Tree training in the Random Forest training we will end up with samples that are outside the bootstrap, these samples are called out of bag samples.

oob_score is calculated by taking out of bag samples, making a prediction on them and then having it also predicted by other Decision Trees who didn’t include it in their bootstrap. Average of their prediction results will be called “majority vote” and initial prediction will be compared with majority vote to come up with an accuracy score. This cross validation method is called OOB Score Error. When it’s included in the Random Forest algorithm it will be used as a guide for estimating generalization of accuracy.

You can simply enable it using following Python code:

RF = RandomForestClassifier(oob_score=True)

Limiting feature amount in the splits

4- bootstrap

Bootstrap aggregation is a genius method used by Random Forests. A random sample (rows) will be taken to train each Decision Tree independently and prediction results of trees are averaged to come up with a consensus as Random Forest’s prediction outcome. Bootstrap aggregation is also known as bagging and it’s the signature move of Random Forest machine learning algorithm.

Benefits of bootstrap aggregation are reducing the possibility of overfitting (very useful feature) and reducing prediction variance (also very useful). It’s also likely to improve performance since a sample is being used for each Decision Tree training instead of the whole dataset.

But, if you’d like to turn off bootstrap aggregation and traing each tree with the whole dataset, this is also possible. Meet bootstrap parameter.

Turning off bagging doesn’t mean Random Forest will lose its randomness since Random Forests still use random features to make splits for each Decision Tree’s training. Plenty of randomness in Random Forests which helps .

If your sample size is too small, you might want to increase the size of training dataset by setting bootstrap to False which will result in training trees with the whole dataset.

In most cases though bootstrap aggregation is expected to yield more favorable results in terms of accuracy since taking random bootstrap samples to train Decision Trees on different samples adds more training variance and eliminates training on the same data repetitively.

It can be turned off using following Python code:

RF = RandomForestClassifier(bootstrap=False)

Limiting feature amount in the splits

5- max_samples

This is a nice additional parameter that Random Forests have. If bootstrap is True it can be used to limit the sample size instead if using the whole dataset. This will eliminate randomness from bagging but it’s an effective method to reduce sample subset size used for training trees.

Here is a Python code that reduces sample size by half using a float. You can also assign an integer to max_samples to directly define the maximum sample subset size. Default of this parameter is None.

RF = RandomForestClassifier(max_samples=0.5)

Limiting feature amount in the splits

6- verbose

Verbosity means wordiness and by setting verbose to an integer you can get more report output from training and prediction tasks of random forest algorithm while it’s working. Let’s see what output we get by setting verbose to 5.

RF = RandomForestClassifier(verbose=5)

Information regarding training phase of Random Forest:

building tree 1 of 100

building tree 2 of 100

building tree 3 of 100

building tree 4 of 100

building tree 5 of 100

Information regarding inference phase of Random Forest:

[Parallel(n_jobs=1)]: Done 1 out of 1 | elapsed: 0.0s remaining: 0.0s

[Parallel(n_jobs=1)]: Done 2 out of 2 | elapsed: 0.0s remaining: 0.0s

[Parallel(n_jobs=1)]: Done 3 out of 3 | elapsed: 0.0s remaining: 0.0s

[Parallel(n_jobs=1)]: Done 4 out of 4 | elapsed: 0.0s remaining: 0.0s

Limiting feature amount in the splits

7- warm_start

Another Random Forest specific parameter warm_start can be used to keep previously trained decision trees that belongs to a Random Forest model while having the ability to add new trees using new training process.

Attention though you will need to increase the tree amount in the forest by increasing n_estimators parameter since all the tree quota will likely be used in the previous training.

So, what’s the benefit of using warm_start in Random Forests? In some specific cases you might want to train the model with multiple datasets that are in the same format but still have some variation among them.

Imagine having surveys from multiple resources, or something like patient data or medical research from multiple hospitals, or it could be client data from multiple banks etc. in all of these cases it will be tremendously helpful to be able to observe effects and variation that each dataset might introduce to the machine learning model.

Here is a rough example in Python that can be used as a starting point for implementing Random Forests with warm_start parameter.

warm_start is set to False by default.

RF = RandomForestClassifier(n_estimators=100, warm_start=True)

#First Training

RF.fit(X1, y1)

print(RF.score(X_test, y_test))

RF.n_estimators += 100

#Second Training

RF.fit(X2, y2)

print(RF.score(X_test, y_test))

RF.n_estimators += 100

#Third Training

RF.fit(X3, y3)

print(RF.(X_test, y_test))

RF.n_estimators += 100

#Fourth Training

RF.fit(X4, y4)

print(RF.(X_test, y_test))

Limiting feature amount in the splits

8- max_depth

max_depth is the allowed tree depth and it will be a criteria for restricting split amounts when needed. It can be used to avoid overfitting or improve the performance and it applied to each tree in a random forest.

You can experiment with it to see what suits your data best, by default maximum depth is assigned to None meaning there is no limiting factor on the tree depth.

You can adjust it as in the Python code below.

RF = RandomForestClassifier(max_depth=3)

Limiting feature amount in the splits

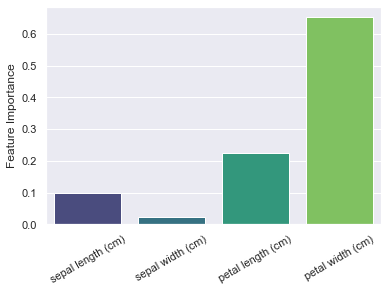

9- max_features

You can also make restrictions regarding the number of features that will be used in the decision trees. It can be used to tremendously improve performance when data is too dimensional. This will help solve scalability issues and decrease variance by introducing randomness to the model.

By default max_features is “auto” for Random Forests concerning trees that make them while for single Decision Tree algorithm max_feature is set to None by default meaning all features will be used for training.

Another benefit of max_features is you can discover the significance of features by tuning it to limit feature size. You can adjust max_features in Python with following code:

ps: It can take strings like sqrt, log2, auto as well as a string to directly define the limit on maximum feature amount. If assigned to None, randomness from selection of random features will be eliminated from the Random Forest and model will be more like a Decision Tree in that sense. It can be helpful for validation and testing.

RF = RandomForestClassifier(max_features="log2")

Limiting feature amount in the splits

10- min_samples_split

min_samples_split is 2 by default and it can be used to restrict splits. Splits won’t be allowed if they don’t include sample amount below the value defined here.

When you’d like to be more strict with your splits you can have a higher value such as 20 for example, and if any split has less than 20 samples it won’t be allowed.

This can help avoid overfitting and make the model more robust by eliminating splits without enough sample.

Example Python code for assigning it:

RF = RandomForestClassifier(min_samples_split=15)

Limiting feature amount in the splits

11- min_samples_leaf

This hyperparameter is 1 by default and it works with the same logic as min_samples_split except it concerns leaf nodes.

Leaf nodes below sample size defined here won’t be allowed.

RF = RandomForestClassifier(min_samples_leaf=5)

Summary

Random Forest is a very versatile and tweakable machine learning algorithm. Its hyperparameters are straightforward and it offers even more flexibility than Decision Trees. A Random Forest algorithm has all the hyperparameters a Decision Tree has and more.

In this Random Forest Tutorial, we’ve seen the most commonly used Random Forest parameters and multiple ways to tweak for better Random Forest Tuning & Optimization.

When you understand the parameters and hyperparameters of the machine learning model you are using you will have more power over the model and overall machine learning process. This will allow you to tweak the results to fit your exact needs and outclass the competition.