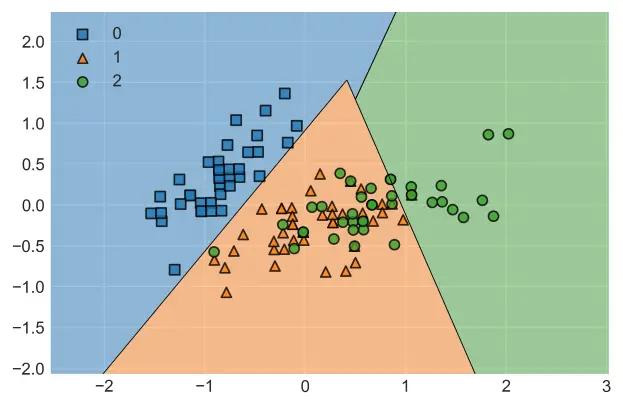

kNN Classification

kNN (k-Nearest Neighbors) can be used to solve classification problems as well as regression problems. In Scikit-learn, KNeighborsClassifier handles classification and KNeighborsRegressor handles regression. kNN is not known to scale very well to big data, but there are workarounds for its performance issues. Thanks to its often competitive accuracy and intuitive inner workings, kNN is still a commonly used machine learning algorithm.
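The intuitive inner workings can be shown with a minimal from-scratch sketch of kNN classification in plain NumPy. It assumes Euclidean distance and a simple majority vote. The helper name knn_predict and the toy data are hypothetical, and Scikit-learn's KNeighborsClassifier differs in details such as tie-breaking and the search structures it uses to find neighbors.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, X_query, k=5):
    """Hypothetical helper: label each query point by majority vote of its k nearest training points."""
    X_train = np.asarray(X_train, dtype=float)
    X_query = np.asarray(X_query, dtype=float)
    predictions = []
    for x in X_query:
        # Euclidean distance from this query point to every training point
        distances = np.linalg.norm(X_train - x, axis=1)
        # Indices of the k smallest distances
        nearest = np.argsort(distances)[:k]
        # Majority vote among the labels of those k neighbors
        votes = Counter(y_train[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions


# Toy data (assumption): two small clusters labeled "a" and "b"
X_train = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]])
y_train = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(X_train, y_train, [[0.5, 0.5], [5.5, 5.5]], k=3))  # ['a', 'b']
```

Each query is labeled by whichever class dominates among its k closest training points. Because every prediction compares the query against the stored training data, prediction cost grows with the size of the training set, which is the root of kNN's scaling issues.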

In this tutorial we will look at KNeighborsClassifier and how it can be used with Python and the Scikit-learn library.

How to Construct a kNN Classifier?

1- KNeighborsClassifier

You can use the K-Nearest Neighbors implementation for classification problems through KNeighborsClassifier from the sklearn.neighbors module.

a) Creating KNeighborsClassifier Model:

You can create a KNeighborsClassifier using Python and Scikit-Learn by using the code below:

from sklearn.neighbors import KNeighborsClassifier

KNC = KNeighborsClassifier()

Once the model is instantiated, it is ready to be trained on training data.
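If you want to see which hyperparameters a freshly created model will use, a short sketch like the following prints a few of its defaults through get_params(), the standard Scikit-learn estimator method. The selection of parameters printed is only an illustration.

```python
from sklearn.neighbors import KNeighborsClassifier

KNC = KNeighborsClassifier()

# get_params() returns the constructor arguments the model was created with
params = KNC.get_params()
for name in ("n_neighbors", "weights", "algorithm", "metric", "p"):
    print(name, "=", params[name])

# Defaults can be overridden at creation time, e.g. using 3 neighbors instead of 5
KNC3 = KNeighborsClassifier(n_neighbors=3)
```

With the defaults, each prediction is a uniform vote among the 5 nearest neighbors under the Minkowski metric with p=2, which is ordinary Euclidean distance.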

b) Training KNeighborsClassifier Model:

You can use code similar to the one below to train a kNN classifier, where X_train holds the training features and y_train the corresponding class labels:

KNC.fit(X_train, y_train)
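The line above assumes X_train and y_train already exist. A minimal sketch of how they might be prepared, assuming the Iris dataset bundled with Scikit-learn stands in for your own data and a 75/25 train/test split:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Assumption: the Iris dataset stands in for your own features X and labels y
X, y = load_iris(return_X_y=True)

# Hold out 25% of the samples for testing; stratify keeps class proportions similar
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

KNC = KNeighborsClassifier()
KNC.fit(X_train, y_train)

# Attributes that fit() sets on the model
print(KNC.classes_)        # class labels seen during training
print(KNC.n_samples_fit_)  # number of training samples the model stored
```

Fitting a kNN model mostly means storing the training data (and, depending on the algorithm parameter, building a search tree over it), so fit() is usually fast; most of the work happens at prediction time.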

c) Predicting with KNeighborsClassifier Model:

After the model is trained, it is ready to make classification predictions, as in the code example below:

yhat = KNC.predict(X_test)
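To check how good the predictions are, you can compare yhat against the true labels. This sketch assumes the Iris dataset and a 75/25 split so that it runs on its own. It computes accuracy with accuracy_score and then calls predict_proba, which for kNN reports the share of neighbor votes each class received.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Assumption: Iris data with a stratified 75/25 train/test split
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

KNC = KNeighborsClassifier()  # n_neighbors=5 by default
KNC.fit(X_train, y_train)

yhat = KNC.predict(X_test)
accuracy = accuracy_score(y_test, yhat)
print("accuracy:", accuracy)

# Vote shares per class for the first three test samples;
# with uniform weights and k=5, every value is a multiple of 0.2
proba = KNC.predict_proba(X_test[:3])
print(np.round(proba, 2))
```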

KNeighborsClassifier can be optimized by adjusting its hyperparameters. Tuning offers plenty of options to make kNN models more accurate and more efficient. See the related article if you are interested in optimizing kNN models.
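One common way to tune these hyperparameters is a cross-validated grid search. The sketch below runs GridSearchCV over n_neighbors, weights and p on the Iris data. The candidate values are illustrative assumptions rather than recommended settings, and this is not necessarily the approach taken in the related article.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Assumption: Iris data with a stratified 75/25 train/test split
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Illustrative candidate values, not recommendations
param_grid = {
    "n_neighbors": [1, 3, 5, 7, 9, 11],
    "weights": ["uniform", "distance"],  # "distance" weights votes by inverse distance
    "p": [1, 2],                         # 1 = Manhattan, 2 = Euclidean distance
}

# 5-fold cross-validation on the training set for every combination
search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5)
search.fit(X_train, y_train)

print(search.best_params_)
test_accuracy = search.score(X_test, y_test)  # refit best model, scored on held-out data
print("held-out accuracy:", test_accuracy)
```

Keeping the test set out of the search means the final accuracy is measured on data that played no part in choosing the hyperparameters.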

Radius-based kNN

2- RadiusNeighborsClassifier

Additionally, you can use a radius-based neighbors classifier instead of k-nearest neighbors. In this case, classification is based on the training samples within a fixed radius rather than on a fixed number of neighbors.

RadiusNeighborsClassifier can be used with a process very similar to the one described for KNeighborsClassifier above, except that you create a RadiusNeighborsClassifier instead of a KNeighborsClassifier.

You can use Python code similar to the following:

from sklearn.neighbors import RadiusNeighborsClassifier

RNC = RadiusNeighborsClassifier()
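A minimal end-to-end sketch of RadiusNeighborsClassifier, assuming a small hypothetical dataset with two well-separated clusters so that every test point has training neighbors within the radius:

```python
import numpy as np
from sklearn.neighbors import RadiusNeighborsClassifier

# Toy data (assumption): two well-separated clusters labeled 0 and 1
X_train = np.array([[0.0, 0.0], [0.0, 0.5], [0.5, 0.0],
                    [3.0, 3.0], [3.0, 3.5], [3.5, 3.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])

# radius defaults to 1.0; it is passed explicitly here for clarity
RNC = RadiusNeighborsClassifier(radius=1.0)
RNC.fit(X_train, y_train)

# Each test point lies inside one of the clusters
X_test = np.array([[0.2, 0.2], [3.2, 3.2]])
yhat = RNC.predict(X_test)
print(yhat)  # [0 1]
```

The fit/predict steps are the same as for KNeighborsClassifier; only the neighbor selection rule changes.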

KNeighborsClassifier vs RadiusNeighborsClassifier

The main difference between KNeighborsClassifier and RadiusNeighborsClassifier is what they base their classification on. While the former’s classification is based on the k closest neighbors, the latter’s classification is based on all neighbors within a fixed radius.

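This difference has some consequences. The k-Nearest Neighbors algorithm will classify every sample, since every sample always has k nearest neighbors, no matter how far away they are. Radius Neighbors, on the other hand, cannot classify a sample that has no training points within the radius. Such samples are treated as outliers, and how they are handled depends on the outlier_label parameter (by default, Scikit-learn raises an error for them).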
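The sketch below illustrates this difference on a hypothetical toy dataset with one query point far from all training samples. KNeighborsClassifier still assigns it a label, while RadiusNeighborsClassifier, with Scikit-learn's default outlier_label=None, raises a ValueError instead of guessing.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier, RadiusNeighborsClassifier

# Toy data (assumption): two clusters labeled 0 and 1
X_train = np.array([[0.0, 0.0], [0.0, 0.5], [0.5, 0.0],
                    [3.0, 3.0], [3.0, 3.5], [3.5, 3.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])

# A query point far away from every training sample
far_point = np.array([[10.0, 10.0]])

knn = KNeighborsClassifier(n_neighbors=3).fit(X_train, y_train)
knn_label = knn.predict(far_point)
print("kNN label:", knn_label)  # labeled by its 3 nearest (still distant) neighbors

rnc = RadiusNeighborsClassifier(radius=1.0).fit(X_train, y_train)
rnc_error = None
try:
    rnc.predict(far_point)
except ValueError as err:
    # No training point lies within radius 1.0 and outlier_label is None
    rnc_error = err
    print("RadiusNeighborsClassifier:", err)
```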

KNeighborsClassifier

- n_neighbors : Instead of a radius, KNeighborsClassifier classifies based on the k nearest neighbors, where k is set by n_neighbors (5 by default).

- outlier_label : N/A. This parameter doesn’t exist in KNeighborsClassifier, since even outliers will be classified based on their nearest neighbors.
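You can observe the fixed-k behavior directly with the kneighbors() method, which returns the distances to, and indices of, the n_neighbors closest training points. In this sketch on hypothetical toy data, even a far-away query gets exactly 3 neighbors:

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Toy data (assumption): two clusters labeled 0 and 1
X_train = np.array([[0.0, 0.0], [0.0, 0.5], [0.5, 0.0],
                    [3.0, 3.0], [3.0, 3.5], [3.5, 3.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])

knn = KNeighborsClassifier(n_neighbors=3).fit(X_train, y_train)

# One query inside a cluster, one far away from everything
queries = np.array([[0.2, 0.2], [10.0, 10.0]])
distances, indices = knn.kneighbors(queries)

print(indices.shape)        # (2, 3): one row per query, always n_neighbors columns
print(distances.round(2))   # the far query's neighbors are all roughly 9.5 to 10 away
```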

RadiusNeighborsClassifier

- radius : This parameter is unique to RadiusNeighborsClassifier and defaults to 1.0. Classification is based on the training samples that fall within this fixed radius.

- outlier_label : Since RadiusNeighborsClassifier operates on a fixed radius value, samples with no training points within that radius (outliers) cannot be classified by their neighbors; this parameter sets the label they receive instead. This can be useful in anomaly detection applications.
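The counterpart for the radius-based model is radius_neighbors(), which returns every training point within radius, so the number of neighbors varies per query and can be zero. This sketch, on hypothetical toy data, uses a zero count as a simple outlier flag, the kind of signal an anomaly detection application might build on:

```python
import numpy as np
from sklearn.neighbors import RadiusNeighborsClassifier

# Toy data (assumption): two clusters labeled 0 and 1
X_train = np.array([[0.0, 0.0], [0.0, 0.5], [0.5, 0.0],
                    [3.0, 3.0], [3.0, 3.5], [3.5, 3.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])

rnc = RadiusNeighborsClassifier(radius=1.0).fit(X_train, y_train)

queries = np.array([[0.2, 0.2], [10.0, 10.0]])
# For each query: distances to and indices of all training points within `radius`
distances, indices = rnc.radius_neighbors(queries)

counts = [len(idx) for idx in indices]
print(counts)  # [3, 0]

# A query with no neighbors inside the radius is an outlier
is_outlier = np.array(counts) == 0
print(is_outlier)  # [False  True]
```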

outlier_label

As the lists above show, each classifier has parameters that are unique to it, and outlier_label deserves a closer look.

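- The outlier_label parameter defines the label given to outlier samples, i.e. samples with no neighbors within the radius. It is None by default, in which case predicting on an outlier raises a ValueError. It can instead be set to a custom label of the same type as the class labels in y, such as -1 for integer labels.

- Additionally, outlier_label can be set to “most_frequent”, which automatically assigns the most frequent class label in the training data to outliers.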
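Both options can be compared in a short sketch. It assumes hypothetical integer class labels, so the manual outlier label is the integer -1 (an arbitrary choice that matches the type of y). Because -1 is not one of the training classes, Scikit-learn may emit a warning about outlier probabilities, but predict() still returns -1 for the outlier.

```python
import numpy as np
from sklearn.neighbors import RadiusNeighborsClassifier

# Toy data (assumption): class 0 appears more often than class 1
X_train = np.array([[0.0, 0.0], [0.0, 0.5], [0.5, 0.0], [0.5, 0.5],
                    [3.0, 3.0], [3.0, 3.5]])
y_train = np.array([0, 0, 0, 0, 1, 1])

# The second test point has no training points within radius 1.0
X_test = np.array([[0.2, 0.2], [10.0, 10.0]])

# Manual label: an integer, matching the integer labels in y_train
manual = RadiusNeighborsClassifier(radius=1.0, outlier_label=-1).fit(X_train, y_train)
print(manual.predict(X_test))    # [ 0 -1]

# "most_frequent": outliers get the most common class in y_train (class 0 here)
frequent = RadiusNeighborsClassifier(radius=1.0, outlier_label="most_frequent").fit(X_train, y_train)
print(frequent.predict(X_test))  # [0 0]
```

A dedicated label such as -1 keeps outliers distinguishable from real classes, while “most_frequent” trades that visibility for always returning a valid class.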

KNeighborsClassifier Summary

In this kNN tutorial we have seen how to create, train and predict with a kNN classifier model using Scikit-Learn and Python.

Additionally, we have seen an alternative implementation called RadiusNeighborsClassifier, which has certain benefits compared to KNeighborsClassifier when outlier management is critical.

You can find a more practical kNN example below, along with other classification algorithms that are definitely worth exploring.

- kNN Examples: Iris

- Random Forest Machine Learning Model

- Logistic Regression Machine Learning Model

- Naive Bayes Machine Learning Model